- Faster deployment: To keep pace with competitors and meet customers' evolving needs, companies today have to update their apps frequently. In many scenarios, however, deploying new app features can be cumbersome and complex, even for large companies with skilled IT teams. Cloud-native computing makes this process much simpler. Because cloud-native applications are built from microservices, each service can be deployed independently, so new apps and features can ship at a rapid pace. Developers can often release new features within a day, without redeploying the rest of the application.

- Wider reach: Cloud-native technologies help companies reach a global audience, expand their market, and improve their prospects. The low-latency infrastructure that comes with going cloud-native also makes it easier to integrate distributed applications. For modern video streaming and live streaming, low latency is extremely important. This is where edge computing and Content Delivery Networks (CDNs) shine: they bring data storage and computation closer to users.

- Leverage bleeding-edge technologies: Bleeding-edge services and APIs that were once available only to businesses with extensive resources are now available to almost every cloud subscriber at nominal rates. These cloud-native services are designed first and foremost for cloud-based systems and their demanding users.

- Quick MVP: Thanks to the elasticity of the cloud, anyone can build a minimum viable product (MVP) or proof of concept (POC) and test it across geographies.

- Leaner teams: Because operational overhead is reduced, teams working with cloud-native technologies can usually be leaner.

- Easy scalability: Most legacy systems depended on physical components such as servers, switches, and cabling. Cloud-native computing, on the other hand, is built entirely on virtualized infrastructure that runs in the cloud. As a result, capacity can be scaled up or down without affecting application performance. By going cloud-native, companies need not invest in expensive processors or server storage to meet their scalability challenges. Instead, they can reallocate resources and scale up or down as demand changes, seamlessly and without disrupting the app's end users.

- Better agility: The reusability and modularity of cloud-native computing make it well suited to firms that practice agile and DevOps methodologies and release new features and apps frequently. Such companies also rely on continuous integration and continuous delivery (CI/CD) processes to launch new features. Because cloud-native applications are composed of microservices, developers can write and deploy code for individual services swiftly. This streamlines the deployment process and makes the whole system more efficient.

- Low-code development: With low-code development, developers can shift their focus from repetitive, low-value tasks to high-value ones that are better aligned with business requirements. It lets developers build app frontends quickly and streamline workflow design, shortening the time to market.

- Saves cost:

Technologies that are a perfect fit for cloud-native:

- K8s: The declarative, API-driven infrastructure of Kubernetes empowers teams to operate independently while focusing on important business objectives. It helps development teams raise their productivity while reducing the complexity and time involved in app deployment. Kubernetes plays a key role in helping companies get the most out of cloud-native computing and realize its main benefits.

- Managed Kafka: With several Fortune 100 companies depending on Apache Kafka, the platform has become deeply entrenched and popular. As cloud technology keeps expanding, a few changes are needed to make Apache Kafka truly cloud-native. Cloud-native infrastructure allows people to leverage SaaS/serverless features in their own self-managed infrastructure.
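To make the declarative model from the K8s item and the easy scalability item more concrete, here is a minimal, self-contained Python sketch of the control-loop idea Kubernetes is built around: you declare a desired state, and a controller repeatedly compares it with the observed state and acts on the difference. The replica calculation follows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with the documented default tolerance of 0.1. The `Deployment` class, the `autoscale` and `reconcile` functions, and the CPU figures are hypothetical illustrations, not the Kubernetes API.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Deployment:
    """Hypothetical stand-in for a declaratively managed workload (not the K8s API)."""
    name: str
    desired_replicas: int          # the state the user declared
    running_replicas: int = 0      # the state actually observed
    events: list = field(default_factory=list)


def autoscale(current_replicas: int, current_cpu: float, target_cpu: float,
              min_replicas: int = 1, max_replicas: int = 10,
              tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA:
    desired = ceil(current * currentMetric / targetMetric), clamped to [min, max]."""
    ratio = current_cpu / target_cpu
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


def reconcile(dep: Deployment) -> None:
    """One pass of a control loop: converge the observed state to the declared state."""
    diff = dep.desired_replicas - dep.running_replicas
    if diff > 0:
        dep.events.append(f"start {diff} replica(s)")
    elif diff < 0:
        dep.events.append(f"stop {-diff} replica(s)")
    dep.running_replicas = dep.desired_replicas


# Usage: declare 3 replicas, then let rising load change the declaration.
web = Deployment(name="web", desired_replicas=3)
reconcile(web)  # 0 -> 3 running
# 3 replicas averaging 90% CPU against a 50% target: ceil(3 * 1.8) = 6.
web.desired_replicas = autoscale(web.running_replicas, 90.0, 50.0)
reconcile(web)
print(web.running_replicas, web.events)  # 6 ['start 3 replica(s)', 'start 3 replica(s)']
```

The point of the split is that users only ever edit `desired_replicas`; the loop, not a human, works out which start or stop actions are needed. A real controller runs this comparison continuously and handles failures, which this sketch leaves out.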

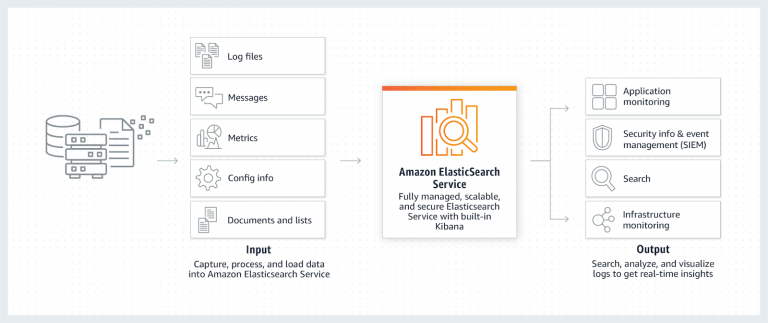

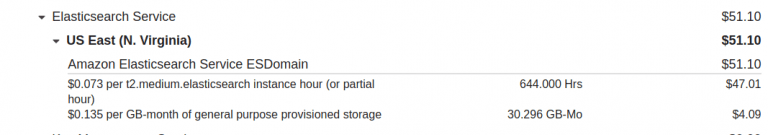

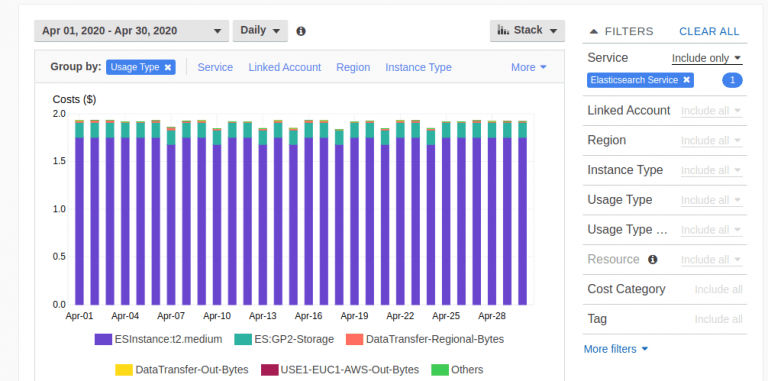

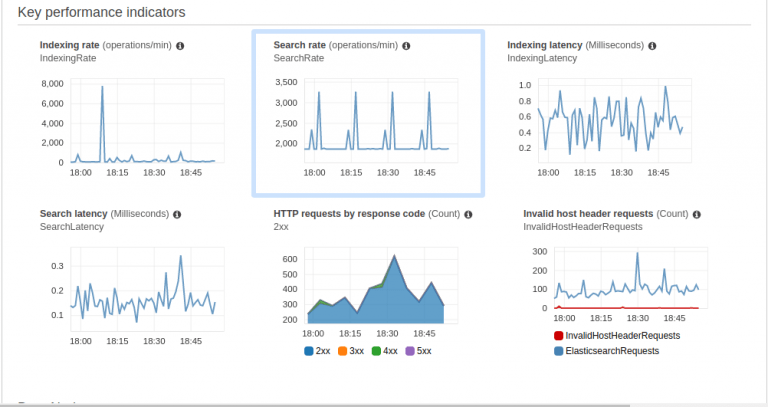

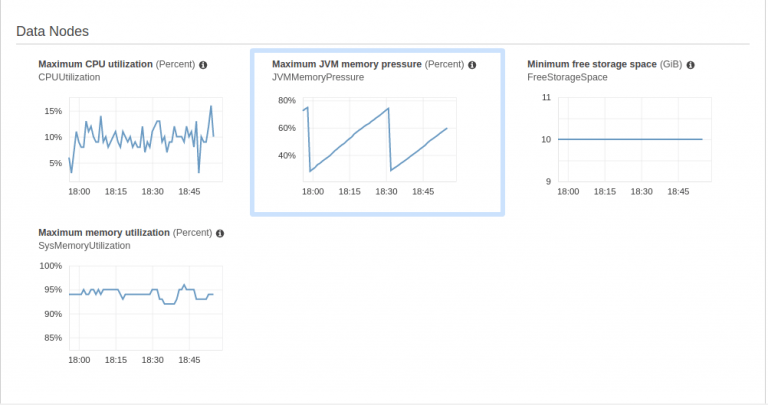

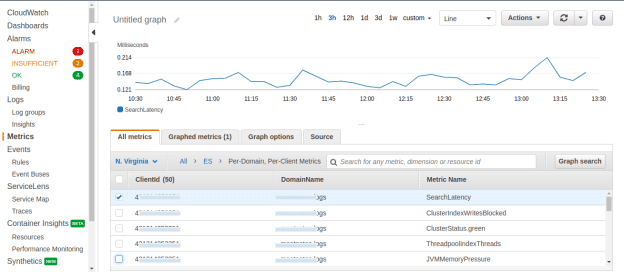

- Elasticsearch: Elasticsearch is a distributed search and analytics engine that supports complex searches across many types of data. For larger cloud-native applications with complex search requirements, Elasticsearch is available as a managed service in Azure.

- ML/AI: The cloud enables firms to use managed ML services, which reduce the burden on their staff. Managed ML eases constraints around data and ML infrastructure, giving all stakeholders access to the models and the insights they produce. AI on the cloud follows the same approach as machine learning but has a wider focus. Many firms today deploy AI and deep learning models in the elastic, scalable environment of the cloud.
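The search capabilities mentioned in the Elasticsearch item rest on an inverted index (provided by Apache Lucene), which maps each term to the documents that contain it, so a query only has to look up its terms instead of scanning every document. The Python sketch below is a toy, single-node illustration of that data structure with a simple AND query. The `InvertedIndex` class and `tokenize` helper are hypothetical and are not Elasticsearch's API; a real deployment adds configurable text analysis, relevance scoring (BM25 by default), and sharding across nodes.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase and split on non-alphanumeric characters (a crude analyzer)."""
    return [t for t in re.split(r"[^a-z0-9]+", text.lower()) if t]


class InvertedIndex:
    """Toy inverted index: term -> set of IDs of documents containing that term."""

    def __init__(self) -> None:
        self.postings: dict[str, set[int]] = defaultdict(set)
        self.docs: dict[int, str] = {}

    def add(self, doc_id: int, text: str) -> None:
        """Store the document and record its ID under every term it contains."""
        self.docs[doc_id] = text
        for term in tokenize(text):
            self.postings[term].add(doc_id)

    def search(self, query: str) -> list[int]:
        """Return sorted IDs of documents containing every query term (AND semantics)."""
        terms = tokenize(query)
        if not terms:
            return []
        # .get() avoids inserting empty entries into the defaultdict for unknown terms.
        matches = set.intersection(*(self.postings.get(t, set()) for t in terms))
        return sorted(matches)


index = InvertedIndex()
index.add(1, "Kubernetes schedules containers across a cluster")
index.add(2, "Kafka streams events between microservices")
index.add(3, "Microservices on Kubernetes scale independently")
print(index.search("kubernetes microservices"))  # [3]
print(index.search("kafka"))                     # [2]
```

Because each lookup touches only the posting sets for the query terms, query cost grows with the number of matches rather than the size of the whole collection, which is what makes this structure practical at scale.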

All companies, no matter their core business, have to embrace digital innovation to stay competitive and ensure their continued growth. Cloud-native technologies help companies gain an edge over their competitors and manage applications at scale and with high velocity. Firms previously limited to quarterly deployments of important apps can now deploy safely several times a day.