Data warehouse (DW) modernization has become vital for modern businesses because it ensures timely access to the data and analytics they need to operate smoothly. In the manufacturing industry especially, data warehouses that power OLAP (Online Analytical Processing) applications support smarter decision-making and can provide a distinctive competitive edge.

Traditional DW systems often cannot cope with the requirements of contemporary industries, which creates several pain points. DW modernization is therefore needed to keep pace with ever-evolving business environments, iterate rapidly on new solutions, and provide adequate support for new data sources. The manufacturing industry in particular involves many complex processes and large investments, so modernizing its data warehousing systems is necessary to ensure the best possible outcomes.

A modern DW built on a cloud data architecture helps companies support their current and future analytics workloads at any scale. The flexibility offered by popular cloud platforms such as AWS lets businesses process their data in real time, which benefits many modern sectors, including manufacturing.

Data warehousing and the manufacturing industry

Over the years, many manufacturing companies have started using DWs to improve their operations and deliver enterprise-level quality. Data and analytics allow manufacturing firms to stay competitive and meet current market needs. Manufacturing professionals use reports, dashboards, and analytics tools to extract insights from data, monitor operations and performance, and inform business decisions. These reports and tools are powered by DWs, which organize stored data to reduce input/output (I/O) and return query results quickly.
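
As a rough illustration of how storage layout affects I/O, the sketch below assumes a columnar layout (a technique many analytical warehouses use, though the article does not name it) and a small hypothetical table of machine readings. It counts how many stored values an average-temperature query touches when records are kept row by row versus column by column. Real warehouses add compression, partitioning, and block-level I/O on top of this idea.

```python
from typing import Dict, List, Tuple

# Hypothetical machine-readings table: (machine_id, timestamp, temperature, vibration)
Row = Tuple[str, int, float, float]
COLUMNS = ("machine_id", "timestamp", "temperature", "vibration")

ROWS: List[Row] = [
    ("press-1", 1, 71.2, 0.03),
    ("press-1", 2, 72.0, 0.04),
    ("press-2", 1, 68.5, 0.02),
    ("press-2", 2, 69.1, 0.05),
]


def to_columnar(rows: List[Row]) -> Dict[str, list]:
    """Pivot row-oriented records into one list of values per column."""
    return {name: [row[i] for row in rows] for i, name in enumerate(COLUMNS)}


def avg_temperature_row_store(rows: List[Row]) -> Tuple[float, int]:
    """Row layout: each whole record is read to reach the temperature field."""
    values_read = 0
    total = 0.0
    for row in rows:
        values_read += len(row)  # count every field in the fetched record
        total += row[2]
    return total / len(rows), values_read


def avg_temperature_column_store(columns: Dict[str, list]) -> Tuple[float, int]:
    """Column layout: only the temperature column is read."""
    temps = columns["temperature"]
    return sum(temps) / len(temps), len(temps)


if __name__ == "__main__":
    _, row_reads = avg_temperature_row_store(ROWS)
    _, col_reads = avg_temperature_column_store(to_columnar(ROWS))
    print(row_reads, col_reads)  # 16 4
```

Both functions return the same average; the column layout reads a quarter of the values here simply because the query needs only one of the four columns.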

In manufacturing firms, transactional systems gather and store detailed data, while DWs serve analytics and decision-making requirements. Modern manufacturing companies typically have systems in place to control individual machines and automate production. These Online Transaction Processing (OLTP) systems are optimized for fast data collection and for the immediate feedback required for direct machine control.

For real-time feedback to be meaningful, it needs historical context, and an advanced data warehouse is what provides that context. Real-time systems can combine historical data from the DW with current process measurements to offer feedback that supports real-time decisions.
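
The idea of combining historical context with live measurements can be sketched as follows. This is a minimal sketch under stated assumptions: the historical readings are assumed to have already been queried from the DW, and the feedback rule is a simple z-score threshold chosen for illustration, not a method the article prescribes. The names `Baseline`, `build_baseline`, `check_reading`, and the threshold `k` are hypothetical.

```python
import statistics
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Baseline:
    """Historical summary for one machine and metric (hypothetical structure)."""
    mean: float
    stdev: float


def build_baseline(history: Sequence[float]) -> Baseline:
    """Summarize historical readings, assumed to be queried from the DW."""
    if len(history) < 2:
        raise ValueError("need at least two historical readings")
    return Baseline(statistics.mean(history), statistics.stdev(history))


def check_reading(current: float, baseline: Baseline, k: float = 3.0) -> str:
    """Return "alert" if the live reading is more than k standard deviations
    from the historical mean (a simple z-score rule), otherwise "ok"."""
    if baseline.stdev == 0:
        # No historical variation: any change from the mean is unusual.
        return "ok" if current == baseline.mean else "alert"
    z = (current - baseline.mean) / baseline.stdev
    return "alert" if abs(z) > k else "ok"


if __name__ == "__main__":
    # Hypothetical historical temperatures for one machine, pulled from the DW.
    history = [70.0, 71.0, 69.0, 70.0, 70.0]
    baseline = build_baseline(history)
    print(check_reading(70.5, baseline))  # ok
    print(check_reading(75.0, baseline))  # alert
```

On its own, a live reading of 75.0 says little; the historical baseline is what turns it into actionable feedback. A production system might instead keep separate baselines per machine or per shift and use more robust statistics.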

Pain points of legacy data warehouses

Data warehouse modernization addresses a host of organizational pain points. Business agility is among the prime goals of modern organizations as they digitize their operations, and it is extremely hard to achieve with legacy tools. The key aim of modernizing a firm's data warehouse environment is to improve its analytics capabilities, productivity, speed, scale, and economics.

Below are some of the challenges with legacy data warehouses:

- Advanced analytics: Many organizations today are investing in OLAP. However, legacy data warehousing environments often prevent them from making effective use of advanced forms of analytics, finding new customer segments, leveraging big data efficiently, and staying competitive.

- Management: Legacy infrastructure is complex, so companies often have to keep hiring professionals just to manage these outdated systems, even when doing so does not advance agility or data strategy. This can lead to high, unnecessary expenses that a modernized system can cut.

- Support: Many newer data sources are not supported by typical legacy data warehouses. These traditional solutions were not designed to handle the variety of structured and unstructured data prevalent today, which can cause problems for many businesses. Moving to modern technologies can resolve these issues.

How can data warehouse modernization and AWS help?

DW modernization lets companies store and manage data at virtually any scale, from gigabytes to terabytes, petabytes, or even exabytes. It also lets them scale their SQL analytics solutions easily.

Advanced data warehouses enable companies to apply best practices when developing competitive business intelligence projects. DW modernization is especially useful in manufacturing and high-tech industries, where a broad diversity of data types and processing is needed for the full range of reporting and analytics.

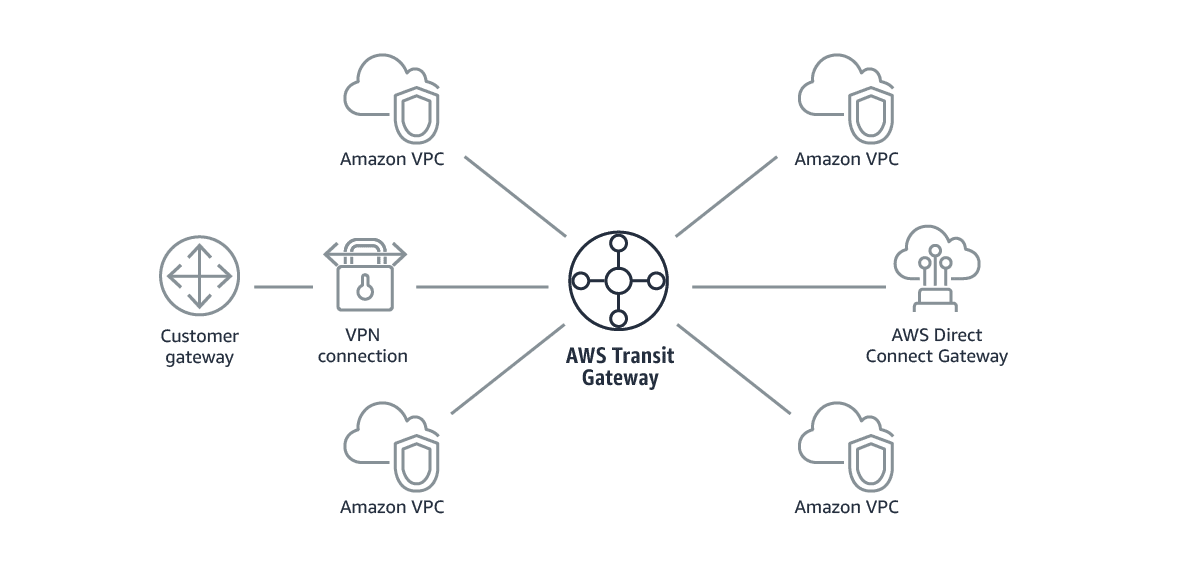

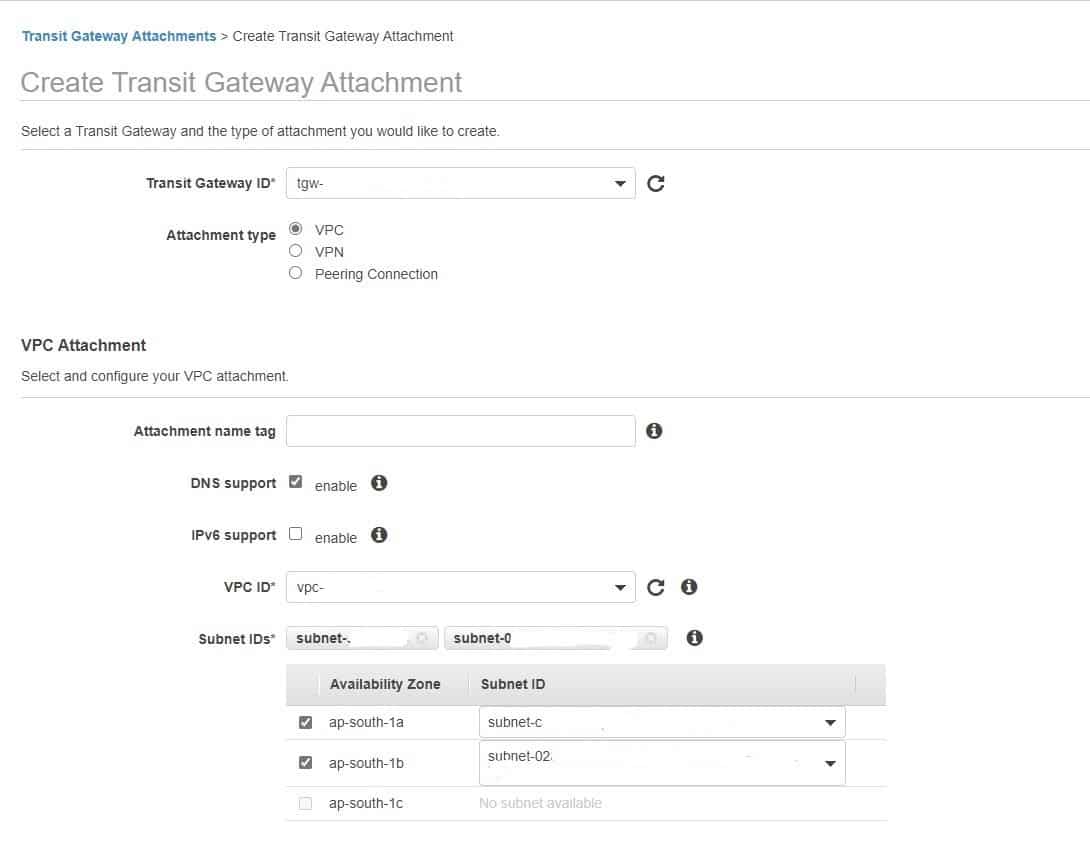

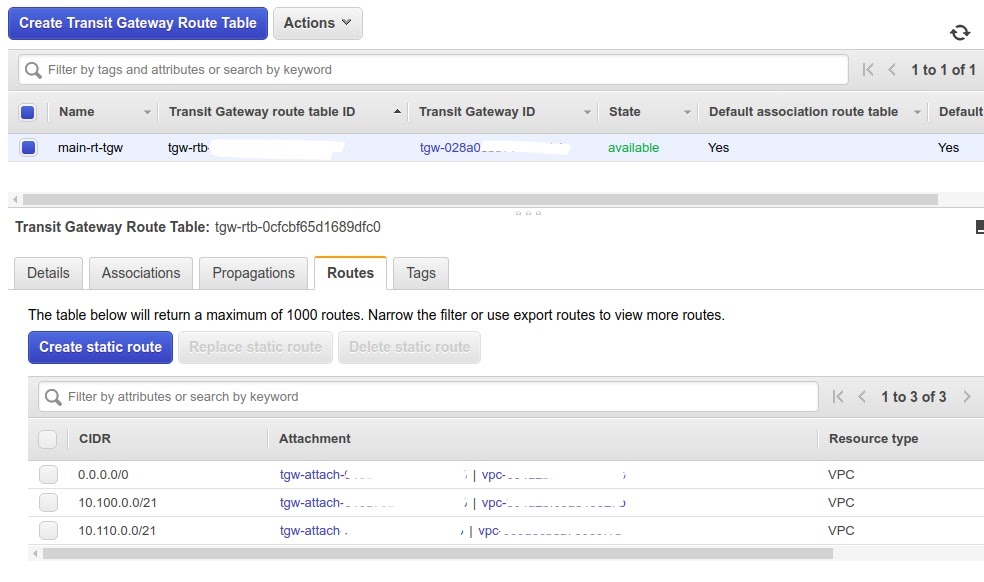

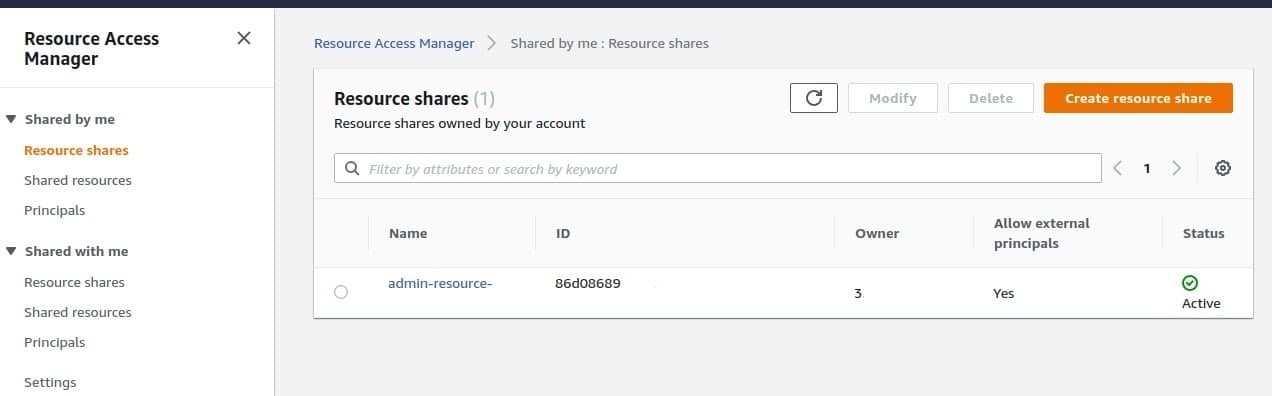

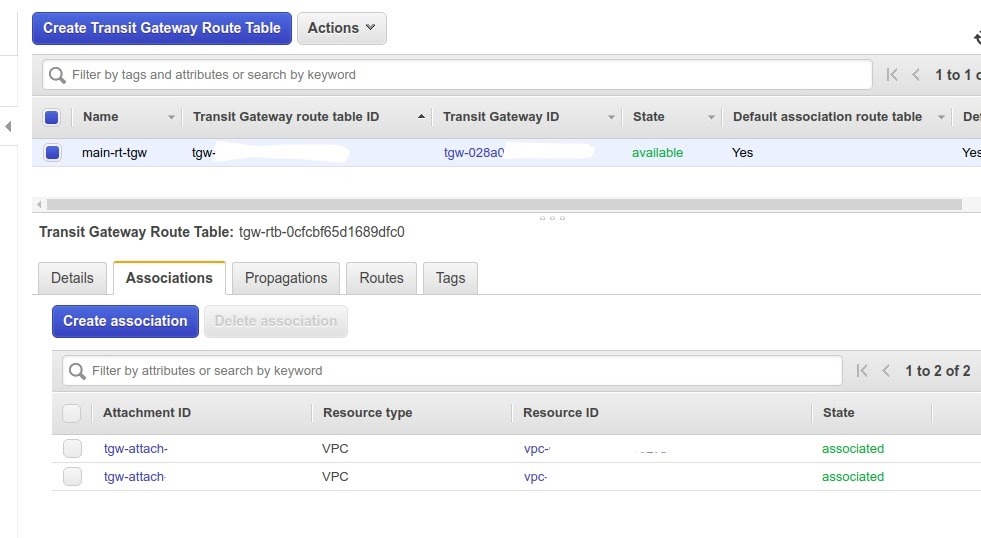

AWS and its APN Data and Analytics Competency Partners offer a robust range of services for implementing the whole data warehousing workflow, including data warehouse analytics, data lake storage, and visualization of results. The AWS data warehouse modernization platform provides faster insights at lower cost, ease of use through automated administration, the flexibility to analyze data both in open formats and in place, and security and compliance for mission-critical workloads.

An AWS data warehouse can help with:

- Swiftly analyzing petabytes of data and providing superior cost efficiency, scalability, and performance.

- Storing all data in an open format without having to move or transform it.

- Running mission-critical workloads even in highly regulated industries like manufacturing.

In contemporary data infrastructures, data comes from a host of sources, including sensor and machine-generated data, CRM and ERP systems, web logs, and numerous other industry-specific sources. Most companies, including manufacturers, struggle just to store and manage these growing volumes and formats of data, let alone analyze them to identify patterns and trends. Data warehouse modernization has therefore become a necessity: it allows businesses to meet rapidly changing requirements, support new data sources, and iterate on new solutions promptly. Many platforms and tools can help businesses modernize their data warehouses today, and AWS solutions are among the most capable.
