As Tim Brown, the CEO of IDEO, beautifully puts it: “Design thinking is a human-centered approach to innovation that draws from the designer’s toolkit to integrate the needs of people, the possibilities of technology, and the requirements for business success.”

The importance of design has been increasing steadily over the years. Today’s consumers have quick access to global marketplaces, and they no longer distinguish between physical and digital experiences.

This has made it difficult for companies to make their products or services stand out from those of their competitors.

Infusing your company with a design-driven culture that puts the customer first may not only provide real and measurable results but also give you a distinct competitive advantage.

All the firms that have embraced a design-driven culture have certain things in common.

First, these firms consider design more than a department. Firms that primarily focus on design try to encourage all functions to focus more on their customers.

By doing this, they also convey the message that design is not a single department. In fact, design experts are everywhere in the organization, working in cross-functional teams and interacting constantly with customers.

Second, for such companies, design is much more than a phase. Popular design-driven companies use both qualitative and quantitative research during the early product development phase, bringing together techniques such as ethnographic research and in-depth data analysis to clearly understand their customers’ needs.

Why Design Thinking?

Thinking like a designer can certainly transform the way companies develop products/services, strategies, and processes.

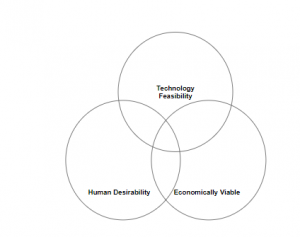

If companies can bring together what is most desirable from a human point of view with what is technologically feasible and also economically viable, they can certainly transform their businesses.

This also gives people who are not trained as designers an opportunity to use creative tools to tackle a range of challenges.

Design Thinking Approach

Empathize: Understand your users as clearly as possible and empathize with them.

Define: Clearly define the problem that needs to be solved.

Ideate: Generate many possible solutions, focusing on the final outcomes rather than the present constraints.

Prototype: Use prototypes for exploring possible solutions.

Test: Test your solutions, reflect on the results, improve the solution, and repeat the process.

(Recently, Mr. Ashish Krishna, Head of Design at Prysm, spoke to our interns about this same Design Thinking Approach.)

Facts that prove the importance of having a Design Thinking Approach

Some years ago, Walmart revamped its e-commerce experience, and as a result, unique visitors to its website increased by a whopping 200%. Similarly, when Bank of America (BOA) undertook a user-centered redesign of its account registration process, online banking traffic shot up by 45%.

In a design-driven culture, firms are not afraid to launch a product that is not totally perfect. That means going to market with an MVP (minimum viable product), learning from customer feedback, incorporating it, and then building and releasing the next version of the product.

A classic example of this is Instagram, which launched a product, learned which features were most popular, and then relaunched a new version. As a result, there were 100,000 downloads in less than a week.

ROI from Design

Let’s take a look at some examples of how design impacted the ROI of companies.

The Nike Swoosh, one of the most popular logos across the globe, has helped sell billions of dollars of merchandise through the years. The icon was designed in 1971, and at that time it cost only $35. However, after almost 47 years, that $35 logo has evolved into a brand that Forbes recently estimated to be worth over $15 billion.

Some years back, the very popular ESPN.com received a lot of feedback from users about its cluttered and hard-to-navigate homepage. The company went ahead and redesigned its website, and the redesign garnered a 35% increase in site revenues.

Some Benefits of having a Design Thinking Approach

Helps in tackling creative challenges: Design thinking gives you an opportunity to look at problems from a completely different perspective. The process allows you to approach an existing issue in a company creatively.

The entire process involves some serious brainstorming and the formulation of fresh ideas, which can expand the learner’s knowledge. By putting a design thinking approach to use, professionals are able to collaborate with one another and get feedback, which helps in creating an invaluable experience for end clients.

Helps in effectively meeting client requirements: As design thinking involves prototyping, all the products at the MVP stage will go through multiple rounds of testing and customer feedback for assured quality.

With a proper design thinking approach in place, you will most likely meet client expectations, as your clients are directly involved in the design and development process.

Expand your knowledge with design thinking: The design process goes through multiple evaluations. The process does not stop even after the deliverable is complete.

Companies continue to measure the results based on the feedback received and ensure that the customer is having the best experience using the product.

By taking part in such a process, design thinkers constantly improve their understanding of their customers. As a result, they are able to figure out aspects such as which tools should be used, how to close the gaps in the deliverable, and so on.

Conclusion

If we take a closer look at a business, we realize that the lines between products/services and user environments are blurring. If companies can deliver an integrated customer experience, it will open up opportunities to build new businesses.

Design thinking is not just a trend that will fade away in a month. It is definitely gaining some serious traction, not just in product companies, but also in other fields such as education and science.