Azure Container Instances (ACI) is the simplest and quickest way to create and run containers in Azure. It is serverless and best suited to single-container deployments that don’t need to integrate with other Azure services (isolated containers).

Some of the features of ACI are as follows:

- Serverless: Run containers without managing servers.

- Fast startup: Launch containers in seconds.

- Billing per second: Incur costs only while the container is running. No upfront or termination fees.

- Hypervisor-level security: Isolate your application as completely as it would be in a VM.

- Custom sizes: Specify exact values for CPU cores and memory.

- Persistent storage: Mount Azure Files shares directly to a container to retrieve and persist state.

- Linux and Windows: Schedule both Windows and Linux containers using the same API.

Because ACI is billed per second, it is not recommended for applications that need to run 24x7. Instead, it is a good option for event-driven applications, data processing jobs, provisioning of CI/CD pipelines, and similar short-lived workloads.
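To make the per-second billing argument concrete, here is a minimal sketch that compares a short daily job with the same container left running around the clock. The two rates and the `ContainerGroup` class are hypothetical and exist only for illustration; real ACI prices depend on region and operating system.

```python
from dataclasses import dataclass

# Hypothetical per-second rates, for illustration only. Real ACI prices
# vary by region and OS and are published on the Azure pricing page.
VCPU_RATE_PER_SECOND = 0.0000125
MEMORY_GB_RATE_PER_SECOND = 0.0000015

SECONDS_PER_DAY = 24 * 60 * 60


@dataclass
class ContainerGroup:
    vcpus: float      # requested CPU cores
    memory_gb: float  # requested memory in GB

    def cost(self, seconds: int) -> float:
        """Cost of running this group for the given number of seconds."""
        per_second = (self.vcpus * VCPU_RATE_PER_SECOND
                      + self.memory_gb * MEMORY_GB_RATE_PER_SECOND)
        return per_second * seconds


group = ContainerGroup(vcpus=1, memory_gb=1.5)

# A data processing job that runs 10 minutes a day for 30 days.
job_cost = group.cost(seconds=10 * 60 * 30)

# The same container left running 24x7 for 30 days.
always_on_cost = group.cost(seconds=SECONDS_PER_DAY * 30)

print(f"10 min/day job: {job_cost:.4f}")
print(f"always on:      {always_on_cost:.4f}")
print(f"ratio:          {always_on_cost / job_cost:.0f}x")
```

Whatever the actual rates are, the ratio between the two scenarios depends only on running time, which is why short-lived workloads fit this billing model better than always-on services.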

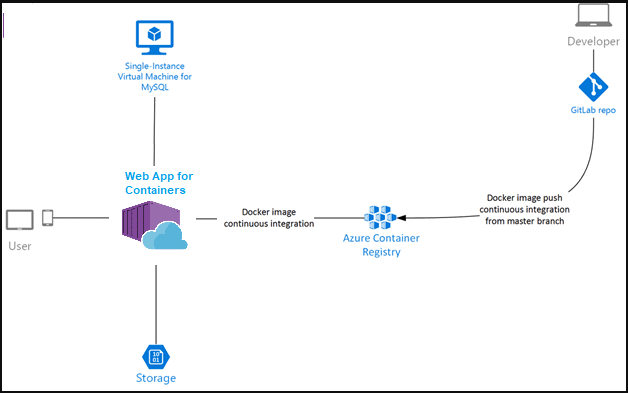

Azure Web Apps is a good option for creating and hosting mission-critical HTTP-based applications in Azure. It supports languages such as .NET, .NET Core, Java, Ruby, Python, Node.js, and PHP. Azure Web Apps is also a good platform for deploying containerized web applications to Azure.

In this approach, a CI/CD pipeline pushes the container image to Docker Hub or a private Azure Container Registry, and the image is then deployed to Azure Web Apps.

Note: Azure Container Registry is Microsoft’s solution for securely storing the container images.
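As a small illustration of what the pipeline pushes and the web app pulls, the sketch below builds an image reference for either registry. The helper `image_reference` and the names `contosoregistry` and `shop/web` are hypothetical; the only assumption about Azure is that an Azure Container Registry login server has the form `<registry-name>.azurecr.io`.

```python
from typing import Optional


def image_reference(repository: str, tag: str,
                    acr_name: Optional[str] = None) -> str:
    """Build the image reference a pipeline would push and a web app would pull."""
    name = f"{repository}:{tag}"
    if acr_name is None:
        # Docker Hub images need no registry prefix.
        return name
    # Azure Container Registry login servers look like <registry-name>.azurecr.io.
    return f"{acr_name.lower()}.azurecr.io/{name}"


# Hypothetical repository and registry names, for illustration only.
print(image_reference("shop/web", "1.4.2"))
print(image_reference("shop/web", "1.4.2", acr_name="contosoregistry"))
```

Tagging each build with a version rather than reusing one mutable tag makes it easier to tell which image a given deployment is running.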

Some of the features of Azure Web Apps for Containers are as follows:

- Deployment in Seconds

- Easy integration with other Azure services

- Supports auto-scaling, staging slots, and automatic deployment

- Manage environment variables

- Rich monitoring experiences

- Enhanced security features such as SSL certificate configuration, IP whitelisting, and Azure AD authentication

- Helps enable CDN

- Ability to host Windows-based and Linux-based containers

Azure Batch helps to run large sets of batch jobs on Azure. With this approach, the batch jobs run inside Docker-compatible containers on the compute nodes. This is a good option for running and scheduling repetitive computing jobs.

Azure Service Fabric is a container orchestrator for deploying and managing microservices in Azure. It is the most mature platform for deploying Windows-based containers, and Microsoft itself uses Service Fabric for its own products such as Cosmos DB, Azure SQL Database, Skype for Business, Azure IoT Hub, and Azure Event Hubs.

With Service Fabric, the microservices are deployed across a cluster of machines. The individual machines in the cluster are known as nodes. A cluster can be built from Windows-based or Linux-based nodes.

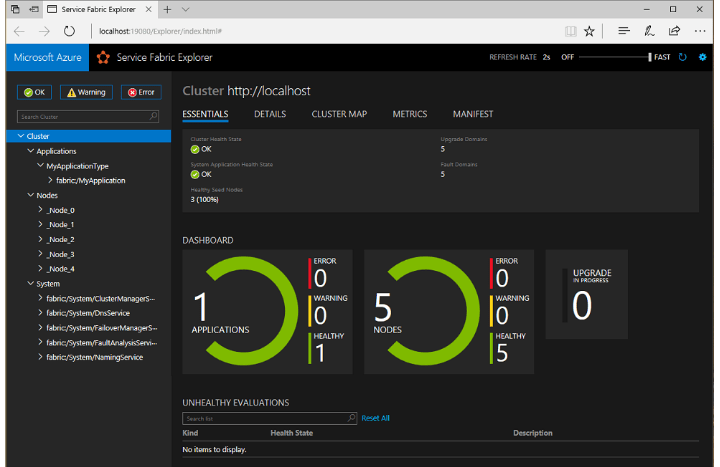

Service Fabric Explorer provides a rich dashboard for continuously monitoring the microservices across a cluster and its nodes.

The key features of Service Fabric are as follows:

- A container orchestrator platform that can run anywhere: Windows or Linux, on-premises or in other clouds

- Scales up to thousands of machines

- Runs anything, depending on your choice of programming model

- Deployment in Seconds and IDE integration

- Service to Service communication

- Recovery from hardware failures, Auto Scaling

- Supports Stateful and Stateless services.

- Security and Support for Role-Based Access Control

Service Fabric Mesh provides a simpler deployment model that is completely serverless. Unlike Service Fabric, Service Fabric Mesh has no overhead of managing clusters and patching operations. It is Microsoft’s fully managed offering based on Service Fabric and runs only in Azure.

Service Fabric Mesh supports both Windows and Linux containers with any programming language and framework.

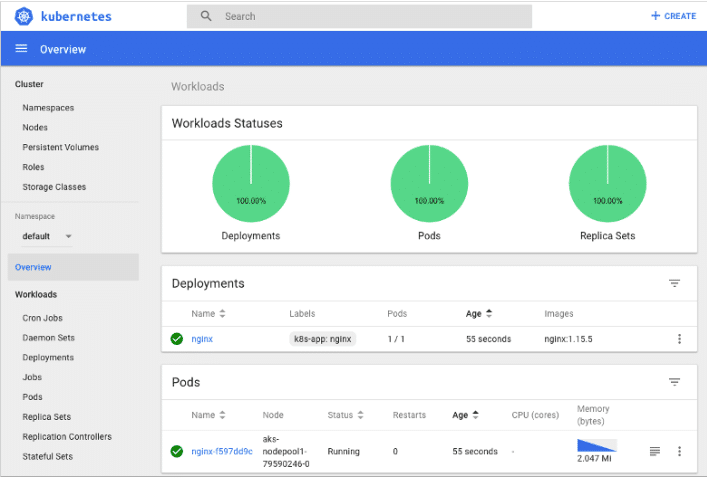

Azure Kubernetes Service (AKS) is a managed Kubernetes cluster in Azure. The Kubernetes master (control plane) is fully managed by Azure at no charge. We only need to manage and pay for the worker nodes and any other infrastructure resources such as storage and networking.

Some of the key features of AKS are as follows:

- Ability to orchestrate applications without the operational overhead

- Identity and Security Management with the integration of Azure AD

- Integrated logging and monitoring

- Elastic Scale

- Support for version upgrades

- Simplified deployment

- Security and support for Role-Based Access Control

Azure also provides the AKS dashboard, which helps to manage Azure Kubernetes Service clusters.

In addition to the services above, there is also Azure Red Hat OpenShift, a fully managed deployment of OpenShift on Azure developed jointly by Microsoft and Red Hat.

| Azure Offering | Use Case |
| --- | --- |
| Azure Container Instances | Burst workloads |
| Azure Web Apps for Containers | Hosting small web applications |
| Azure Batch | Running batch jobs |
| Azure Service Fabric | Developing Windows-based microservices, or any legacy .NET-based systems |
| Azure Kubernetes Service | Complex distributed applications that need simple deployments and efficient use of resources |
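
The table above can also be kept as a small lookup structure, for example in a team's internal tooling or documentation scripts. The sketch below is only an illustration of that idea; the `USE_CASES` dictionary restates the table, and the helper `offerings_for` is a hypothetical name.

```python
# The use-case table expressed as a dictionary (offering -> typical use case).
USE_CASES = {
    "Azure Container Instances": "Burst workloads",
    "Azure Web Apps for Containers": "Hosting small web applications",
    "Azure Batch": "Running batch jobs",
    "Azure Service Fabric": "Windows-based microservices or legacy .NET-based systems",
    "Azure Kubernetes Service": "Complex distributed applications that need "
                                "simple deployments and efficient use of resources",
}


def offerings_for(keyword: str) -> list:
    """Return the offerings whose use case mentions the keyword (case-insensitive)."""
    keyword = keyword.lower()
    return [name for name, use in USE_CASES.items() if keyword in use.lower()]


print(offerings_for("batch"))
print(offerings_for("microservices"))
```

A keyword match like this is only a starting point for discussion; the real choice also depends on the team's skills, existing infrastructure, and budget.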